
Filling the Proximity Tracing Gap

Tracing apps are all over the news these days. Some countries have been actively using them for some time now, others are in the process of launching them, while still others are stuck in various debates.

Consequently, there has been a lot of press about tracing apps. However, I feel that most news sites, even the tech media, have failed to highlight some crucial points about how these apps (could) work. Sure, some have mentioned them, maybe even explained them. But many have not, and those that have did not sufficiently stress their importance.

What do I mean by important? Important, in the sense that understanding them will enable you to develop a much better mental model of what tracing apps are. Important, because understanding them enables you to better participate in the current debate on tracing apps.

My goal is to explain the concepts, while striking a balance between too little detail and overwhelming the reader. If you want little detail, stay with the mainstream media. If you want to really dive into the topic, read the various white papers. (Especially if you are studying Computer Science. They are a great case study of security protocols.)

A few notes before I start:

- I won’t go into the pros and cons of tracing apps and the concerns they raise. I will simply try to explain a couple of technical points.

- Whenever I speak of “tracing apps”, I mean tracing apps based on the DP^3T system. It is the one currently being implemented in Switzerland (among other countries). I cannot speak for other approaches.

- Contact tracing or proximity tracing? The Swiss Federal Office of Public Health (FOPH) as well as Google and Apple (mostly) use the term proximity tracing, so for the sake of consistency I will, too.

- I am going to make simplifications in order to strike a middle ground.

1. What data is uploaded to the server?

TL;DR:

- always (once you report a positive test): your random seed

- opt-in: contact event data, only for contacts with infected patients

- never: location data, contact event data for uninfected contacts

Some terminology: a “contact” is not an entry in your address book, but rather the event of being in close proximity with another person for some time.

Understanding what data is uploaded and why requires a brief explanation of what happens during your use of the app.

In the design described in section 2 of the DP^3T white paper, your app creates a long and random (and thus unguessable) sequence, the so-called seed. That seed is initially secret and stored only on your phone. From that seed, a list of so-called ephemeral IDs is derived. Note that the other way around is infeasible: given an ephemeral ID, you cannot compute the seed. Also note that without knowledge of the seed, you cannot tell whether two given IDs come from the same seed, i.e. from the same user.
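To make the one-way relationship concrete, here is a minimal Python sketch, assuming HMAC-SHA256 as the derivation function. This is a simplification: the white paper's actual construction rotates the seed daily and expands it with a pseudorandom function and a stream cipher, and the sizes below (32-byte seed, 16-byte IDs, 96 IDs per day) are illustrative assumptions.

```python
import hashlib
import hmac
import secrets

SEED_BYTES = 32    # assumption: a 256-bit random seed
EPHID_BYTES = 16   # assumption: 128-bit ephemeral IDs
IDS_PER_DAY = 96   # assumption: a new ID every 15 minutes


def new_seed() -> bytes:
    """Create a fresh, unguessable seed from a cryptographically secure RNG."""
    return secrets.token_bytes(SEED_BYTES)


def derive_ephemeral_ids(seed: bytes, count: int = IDS_PER_DAY) -> list[bytes]:
    """Derive `count` ephemeral IDs from `seed` (simplified, not the DP^3T construction).

    HMAC-SHA256 keyed with the seed is one-way: the seed yields every ID,
    but without the seed an ID reveals neither the seed nor which other
    IDs belong with it.
    """
    ids = []
    for index in range(count):
        mac = hmac.new(seed, index.to_bytes(4, "big"), hashlib.sha256)
        ids.append(mac.digest()[:EPHID_BYTES])  # truncate to the broadcast size
    return ids
```

Anyone holding `seed` can call `derive_ephemeral_ids(seed)` and get exactly the same list, which is what makes the later matching step possible.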

While you use the app, it broadcasts these ephemeral IDs via Bluetooth, going through the list one by one. One ID is broadcast for some time, then the next, and so on. Regularly changing the IDs is what makes it harder to track you, since to anyone but you, the IDs look random. At the same time, your app stores all the ephemeral IDs it receives from other apps.
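The broadcast schedule and the local log of received IDs could be sketched as follows. The 15-minute epoch, the `ContactLog` class and the use of minutes since midnight as a clock are hypothetical simplifications; the Bluetooth transport itself is not modelled.

```python
from dataclasses import dataclass, field

EPOCH_MINUTES = 15  # assumption: how long each ephemeral ID is broadcast


def id_to_broadcast(my_ids: list[bytes], minutes_since_midnight: int) -> bytes:
    """Return the ID to send right now: one ID per epoch, in list order."""
    epoch = minutes_since_midnight // EPOCH_MINUTES
    return my_ids[epoch % len(my_ids)]


@dataclass
class ContactLog:
    """Hypothetical local store of the IDs heard from other phones.

    The IDs look random to us, so all we can do for now is remember
    each one together with the minutes at which we heard it.
    """
    heard: dict[bytes, list[int]] = field(default_factory=dict)

    def record(self, ephid: bytes, minute: int) -> None:
        self.heard.setdefault(ephid, []).append(minute)
```

Note that nothing in this sketch is sent anywhere: the log stays on the phone.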

If and only if you test positive (and enter the code the test centre gives you) does the app upload your seed to a central server, usually run by the local health authority. (Your app then generates a new seed to start fresh.)
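Sketched in Python, the client side of this step is small. `TracingApp`, `UploadRequest` and `report_positive` are hypothetical names, not the Swiss app's actual code, and the payload format is an assumption; the point is what it contains (the seed and the test centre's code) and what it lacks (locations, received IDs).

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class UploadRequest:
    """Hypothetical upload payload: the seed plus the test centre's code.

    Note what is absent: no location, no list of IDs we have heard.
    """
    seed: bytes
    authorization_code: str


class TracingApp:
    def __init__(self) -> None:
        # Secret; it only leaves the phone if we report a positive test.
        self.seed = secrets.token_bytes(32)

    def report_positive(self, authorization_code: str) -> UploadRequest:
        """Build the upload for a positive test, then start over with a fresh seed."""
        request = UploadRequest(seed=self.seed, authorization_code=authorization_code)
        # Future IDs come from a new seed, so they cannot be linked to the reported ones.
        self.seed = secrets.token_bytes(32)
        return request
```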

One reason for uploading the seed rather than the IDs is to save data: the list of ephemeral IDs is huge, since you change them regularly. And you do need to change IDs in order to prevent tracking; recall that there is no obvious connection between any two IDs.
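A back-of-the-envelope calculation shows the savings. All four constants are illustrative assumptions (a new 16-byte ID every 15 minutes, 14 days of IDs to report, a 32-byte seed), and, as in the simplified design described here, the one seed is enough to regenerate all of those IDs.

```python
SEED_BYTES = 32        # assumption: 256-bit seed
EPHID_BYTES = 16       # assumption: 128-bit ephemeral IDs
EPOCH_MINUTES = 15     # assumption: a new ID every 15 minutes
REPORTED_DAYS = 14     # assumption: days of IDs an infected user must report

ids_per_day = 24 * 60 // EPOCH_MINUTES
id_list_bytes = ids_per_day * REPORTED_DAYS * EPHID_BYTES

print(f"{ids_per_day} IDs per day")                # 96 IDs per day
print(f"ID list: {id_list_bytes} bytes")           # ID list: 21504 bytes
print(f"seed:    {SEED_BYTES} bytes")              # seed:    32 bytes
print(f"ratio:   {id_list_bytes // SEED_BYTES}x")  # ratio:   672x
```

Under these assumptions the ID list is 672 times the size of the seed, per infected user, and every phone downloads the data for all newly infected users every day.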

The server collects a day’s worth of seeds from newly infected patients. Once a day, your app downloads the list of seeds of infected patients.
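The server's role in this step can be sketched as an in-memory class. `ProximityServer`, `submit` and `daily_batch` are hypothetical names, and the one-time-code check is a simplified assumption about how the test centre's authorization might be verified.

```python
from collections import defaultdict
from datetime import date


class ProximityServer:
    """Hypothetical in-memory backend: accepts seeds, publishes them once a day."""

    def __init__(self, valid_codes: set[str]) -> None:
        self._valid_codes = set(valid_codes)  # one-time codes issued by test centres
        self._batches: dict[date, list[bytes]] = defaultdict(list)

    def submit(self, seed: bytes, code: str, day: date) -> bool:
        # Only accept uploads backed by a genuine positive test; each code works once.
        if code not in self._valid_codes:
            return False
        self._valid_codes.remove(code)
        self._batches[day].append(seed)
        return True

    def daily_batch(self, day: date) -> list[bytes]:
        """The list every app downloads: seeds only, no identities, no contacts."""
        return list(self._batches.get(day, []))
```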

Then, locally on your phone, your app goes through this list of “infected seeds”. For each seed, it computes the corresponding ephemeral IDs, just as the infected user's app did to broadcast them. Your app then checks whether it previously “saw” any of them. Under certain conditions (e.g. if it finds a minimum number of contact events with infected persons), it issues a warning. Only at this point can your app make sense of the ephemeral IDs that it “met”.
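The matching step, which runs entirely on the phone, might look like this. As a simplification it assumes the IDs were derived from the seed with HMAC-SHA256, and `MIN_MATCHES` is a hypothetical stand-in for the app's real warning conditions, which this sketch does not reproduce.

```python
import hashlib
import hmac

EPHID_BYTES = 16   # assumption: 128-bit ephemeral IDs
IDS_PER_DAY = 96   # assumption: a new ID every 15 minutes
MIN_MATCHES = 2    # hypothetical warning threshold


def derive_ephemeral_ids(seed: bytes, count: int = IDS_PER_DAY) -> list[bytes]:
    # Same (assumed) derivation the infected user's phone used when broadcasting.
    return [
        hmac.new(seed, i.to_bytes(4, "big"), hashlib.sha256).digest()[:EPHID_BYTES]
        for i in range(count)
    ]


def check_exposure(infected_seeds: list[bytes], heard_ids: set[bytes]) -> bool:
    """Recompute each infected user's IDs and count how many we heard."""
    for seed in infected_seeds:
        matches = sum(1 for ephid in derive_ephemeral_ids(seed) if ephid in heard_ids)
        if matches >= MIN_MATCHES:
            return True
    return False
```

Only `infected_seeds` comes from the server; `heard_ids` never leaves the phone.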

DP^3T also describes a slight variant of the above (white paper section 3). This variant is currently not implemented by Google/Apple or in the Swiss PT app, but it is interesting enough to mention as an outlook.

In this variant, your app would, in the last step, neither receive a list of “infected seeds” nor compute all the ephemeral IDs of the infected patients. Here is what happens instead:

The server still receives the seeds from infected users, as before. From them, it computes the corresponding ephemeral IDs and stores (hashes of) them in a compact data structure called a Cuckoo filter, which it sends to all apps. Your app can check whether an ephemeral ID it encountered is contained in the Cuckoo filter, and can thus still issue a warning if needed. However, thanks to the underlying maths, it cannot in practice extract the “infected seeds” from the Cuckoo filter.
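For the curious, here is a minimal Cuckoo filter in Python. Each item is reduced to a short fingerprint stored in one of two candidate buckets, and a lookup checks just those two buckets. It never misses an item that was inserted successfully but has a small false-positive rate, and since only fingerprints are stored, the items cannot be read back out. The items stand for hashed ephemeral IDs; the bucket count, fingerprint size and eviction limit are illustrative assumptions, not DP^3T's parameters.

```python
import hashlib
import random

BUCKET_SIZE = 4    # fingerprints per bucket
MAX_KICKS = 500    # evictions to try before declaring the filter full


def _hash(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


class CuckooFilter:
    """Approximate set: no false negatives, a small false-positive rate.

    Only short fingerprints are stored, so the inserted items cannot be
    recovered from the filter.
    """

    def __init__(self, num_buckets: int = 1024, fingerprint_bits: int = 16) -> None:
        if num_buckets <= 0 or num_buckets & (num_buckets - 1):
            raise ValueError("num_buckets must be a power of two")
        self.mask = num_buckets - 1
        self.fp_mask = (1 << fingerprint_bits) - 1
        self.buckets: list[list[int]] = [[] for _ in range(num_buckets)]
        self.rng = random.Random(0)  # deterministic eviction choices

    def _fingerprint(self, item: bytes) -> int:
        return _hash(b"fp:" + item) & self.fp_mask

    def _alt_index(self, index: int, fp: int) -> int:
        # XOR with a hash of the fingerprint is its own inverse, so an entry can
        # move between its two buckets knowing only its fingerprint.
        return (index ^ _hash(fp.to_bytes(8, "big"))) & self.mask

    def insert(self, item: bytes) -> bool:
        fp = self._fingerprint(item)
        i1 = _hash(item) & self.mask
        i2 = self._alt_index(i1, fp)
        for i in (i1, i2):
            if len(self.buckets[i]) < BUCKET_SIZE:
                self.buckets[i].append(fp)
                return True
        # Both buckets full: swap fp with a random resident and relocate that one.
        i = self.rng.choice((i1, i2))
        for _ in range(MAX_KICKS):
            slot = self.rng.randrange(BUCKET_SIZE)
            fp, self.buckets[i][slot] = self.buckets[i][slot], fp
            i = self._alt_index(i, fp)
            if len(self.buckets[i]) < BUCKET_SIZE:
                self.buckets[i].append(fp)
                return True
        # Filter too full; the fingerprint in hand is dropped. A production
        # implementation would keep it aside or grow the table instead.
        return False

    def contains(self, item: bytes) -> bool:
        fp = self._fingerprint(item)
        i1 = _hash(item) & self.mask
        return fp in self.buckets[i1] or fp in self.buckets[self._alt_index(i1, fp)]
```

With 16-bit fingerprints and two buckets of four slots, a lookup for an item that was never inserted matches by chance roughly 8 times in 65,536.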

Furthermore, DP^3T describes that the app can upload data about your contact with infected patients (e.g. when, how long, how often). This is to support the work of epidemiologists. It is purely optional and currently not implemented in the Swiss app.

2. Where do I have privacy?

Privacy here has several facets:

Privacy against other users/apps

Privacy against other users is preserved.

Other apps do not learn your identity, since the only link between your random seed and your identity is the code from the test centre. But that code is not sent to other users; it stays on the server.

Privacy is even stronger in DP^3T’s second variant: here, other users do not even learn the “infected seeds”. They only learn a Cuckoo filter, and from that the only thing that they can learn is whether or not they have a match.

Privacy against the central server

Privacy against the server depends on your situation:

- You never test positive (or if you do, never enter the test centre code). Then no data is sent to the server.

- You test positive and enter the code in the app. Then the server learns:

  - that you are infected. Okay, the health authority saw your positive test anyway.

  - your seed. Useless on its own, though: it is only useful to someone who met you and can check for matching ephemeral IDs.

Crucially however, since the ephemeral IDs of your contacts do not leave your phone, the server is unable to build a social graph, a network of who is meeting whom. The only data your app sends to the server is “I am infected and this is my random sequence” but not “this is who I met”.

3. How are Google and Apple involved?

The Swiss proximity tracing app is going to use the software interface (API) that Google and Apple provide via system updates. What are the implications of this?

The idea behind using the operating system's API is to split responsibilities: the OS handles the exchange of ephemeral IDs, the proximity measurement, and the storage and matching of the IDs it receives. Since it has much deeper access to Bluetooth, the hope is that the system will be more accurate. The app downloads the infected users' seeds from the server, hands them to the operating system for matching, and handles the rest: communicating with the server and displaying a pretty UI.

Another advantage arising from the collaboration of the two tech companies is increased compatibility, since they agreed on a common interface. Have you ever tried sending a file via Bluetooth from an Android phone to an iPhone? Then you know what I mean.

Also, both Android and iOS give you control over which app has access to the proximity data, similar to the way you control other app permissions.

Note though, that the latest FAQ (as of writing) seems to be very careful not to explicitly say that no data will be shared with Google or Apple. It only talks about not sharing the identities of users with anyone, including Google and Apple.

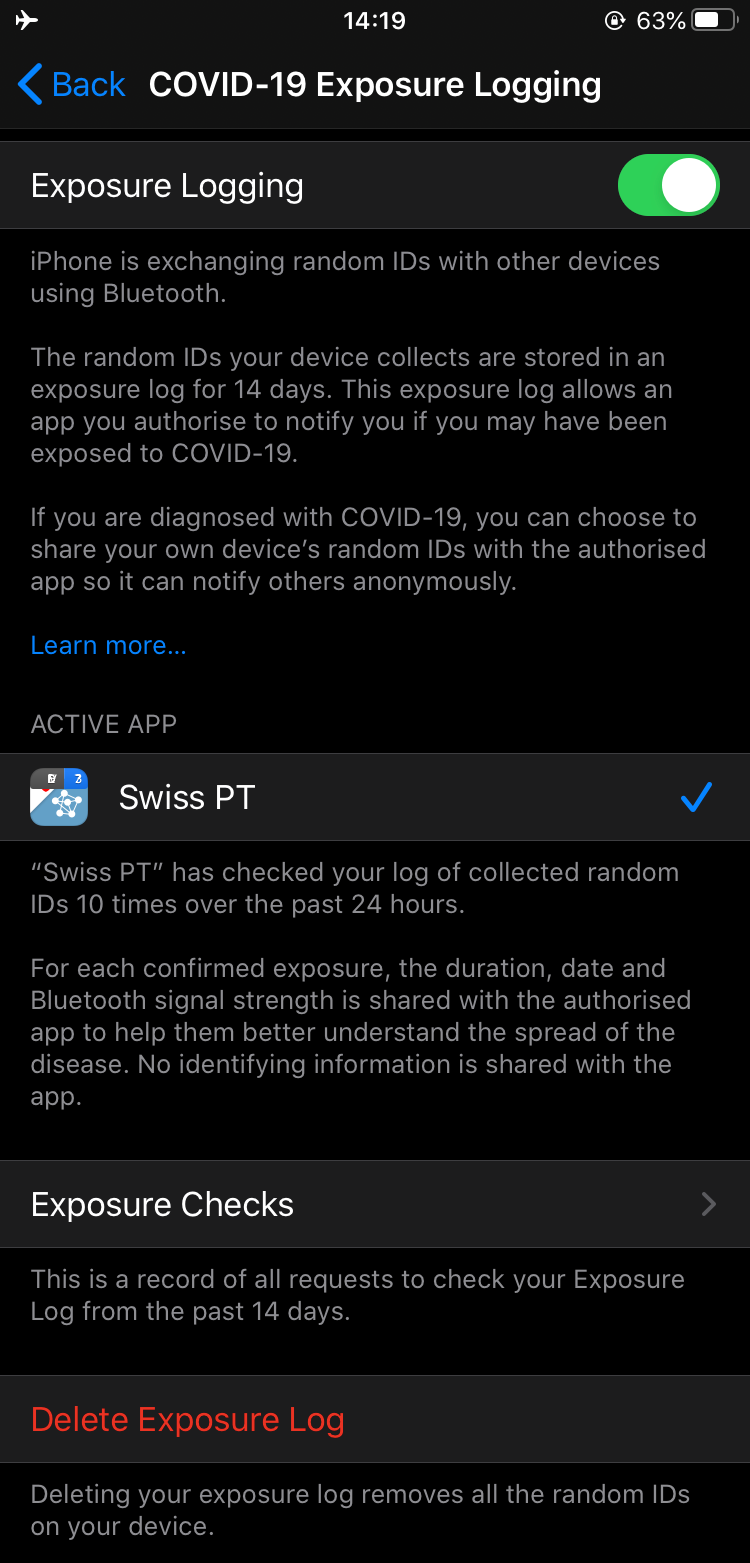

Exposure logging settings in iOS 13.5

Final words

I hope this helps a little bit in understanding conceptually what is going on with these tracing apps.

Yet, in the end, it all comes down to trust. Trust that the implementation really does what the specification says. Trust that the software contains no critical bugs. Trust that the maths and formalisms of the specification are correct. How this trust is earned and lost is a different matter.

Should you find any errors, feel free to contact me.

Sources and further reading:


2020-05-21 12:45 +0000