Learnings From Two Student Cluster Competitions

Having taken part in two Student Cluster Competitions now, I want to take a step back and reflect on what we learnt. That will help me organise my thoughts and will hopefully help other teams prepare for the challenge. With HPC being such a niche field, there is not much writing about the cluster competitions out there (though there does exist one book! But I have yet to read it…).

There are three significant Student Cluster Competitions: ASC in Asia, ISC in Frankfurt (Germany) and SC in the US. The last two are paired with supercomputing conferences. The oldest and “original” Supercomputing Conference (SC) has taken place since 1988 and is the largest, with over 11,000 visitors.

During each conference a Student Cluster Competition takes place: teams of 6 students build a small supercomputer with the help of their advisors and industry partners. They are given a set of benchmarks and real-world applications that they need to run within a power limit of 3 kW. Teams are then judged on the performance of their system as well as interviews with a jury where they need to demonstrate their knowledge of HPC.

I was lucky to be part of RACKlette, the first team from Switzerland to take part in one of these competitions. We first competed at ISC19 in June and now again in November at SC19. Despite being newcomers we were quite successful: in Frankfurt we placed third overall and won the LINPACK award, and in Denver we placed 5th in a highly competitive field.

In this post, I will outline the main learnings our team had in the hope that it will be helpful to future teams. Among others, I will go over what you should remember to bring with you, some tips on how to tune both your software and hardware and finally what to expect from the competition in general.

What to bring

It’s easy, right? Your local supercomputing centre organises the shipment of the cluster, you bring your laptop, and you are all set.

Unfortunately, that’s far from it. Here is a - most likely incomplete - list of things you should bring to the competition:

Cabling:

- 6 Ethernet cables: each of you needs to connect to the cluster via wire.

- Ethernet-to-USB-C adapters (thank you Tim Apple)

- Multiple socket outlet

- CH-to-US adapter: different sockets!

Monitoring:

- External monitor: Provided at SC but not at ISC, ask CSCS to ship one with the cluster

- HDMI cable

- Raspberry Pi: Set up InfluxDB and Grafana to read out IPMI readings

- Second laptop: Always good if yours fails, plus you can use it to display the Grafana dashboard (the Pi is quite busy running the DB). Alternatively bring your iPad to be more productive with Sidecar (please can somebody build an equivalent for Linux?)

Varia:

- Caffeine: Very important at SC where the competition lasts 48 hours non-stop. The organisers also provide coke, but quickly there is only diet coke left, so buy some yourself at the local supermarket.

- Replacement SSD: One team lost their boot drive. It’s unlikely, but if it happens you want to be prepared.

- Paper clip: In case your team mate locked the rack but is now asleep and you need to reboot after a power outage.

- National flag: a Swiss flag always attracts extra love from your human fans, and more people stop by a nicely decorated booth. Looks great if blowing in the wind of the cooling fans.
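For the Raspberry Pi monitoring item in the list above, here is a minimal sketch of turning an IPMI power reading into an InfluxDB line-protocol record. It assumes your BMC answers `ipmitool dcmi power reading` and that the output contains a line of the form `Instantaneous power reading: 220 Watts`; the measurement name `node_power`, the tag `host` and the field `watts` are made up for illustration, and the sample text is not real output from our cluster.

```python
import re

# Assumed shape of one line of `ipmitool dcmi power reading` output.
_POWER_RE = re.compile(r"Instantaneous power reading:\s*(\d+)\s*Watts")


def parse_power_watts(ipmitool_output: str) -> int:
    """Extract the instantaneous power draw in watts from ipmitool output."""
    match = _POWER_RE.search(ipmitool_output)
    if match is None:
        raise ValueError("no instantaneous power reading found")
    return int(match.group(1))


def to_line_protocol(host: str, watts: int, timestamp_ns: int) -> str:
    """Format one reading as InfluxDB line protocol.

    'node_power', 'host' and 'watts' are hypothetical names for this sketch.
    """
    return f"node_power,host={host} watts={watts}i {timestamp_ns}"


# Illustrative sample text, not a real reading.
sample = """
    Instantaneous power reading:                   220 Watts
    Minimum during sampling period:                180 Watts
"""
print(to_line_protocol("node01", parse_power_watts(sample), 1574887800000000000))
```

Summing such per-node readings gives a rough cluster total, but it will not match the official PDU exactly, since switches and power supply losses are not included.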

Tuning applications

Tuning things is what this competition is all about. There are a thousand different means to that end, and thanks to the complexity of modern systems much of it comes down to lots of time invested and trial-and-error. But if you suddenly get a 2x speedup it can feel very worthwhile! (Remember, running inputs naively can take 6ish hours each!)

Other than getting speedup, the other thing to keep in mind is the power limit. You cannot throw 16 Nvidia V100s at it, each consuming 250 W peak power, if the committee’s alarm goes off at 3 kW!
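The arithmetic behind that warning can be sketched in a few lines. The 3 kW limit and the 250 W per V100 come from the text; the 1000 W reserved for CPUs, memory, fans and network is a purely hypothetical number that you would replace with your own measurements.

```python
POWER_LIMIT_W = 3000  # competition limit from the text (3 kW)


def peak_power_w(gpu_count, gpu_peak_w, other_w=0):
    """Worst-case draw if every GPU hits its peak at the same time."""
    return gpu_count * gpu_peak_w + other_w


def max_gpus_within_limit(gpu_peak_w, other_w, limit_w=POWER_LIMIT_W):
    """How many GPUs fit at peak power once the rest of the system is accounted for."""
    return max(0, (limit_w - other_w) // gpu_peak_w)


# 16 V100s at 250 W already exceed the limit before counting anything else.
print(peak_power_w(16, 250))             # 4000
# With a hypothetical 1000 W for everything that is not a GPU:
print(max_gpus_within_limit(250, 1000))  # 8
```

In practice you can fit more cards than this suggests by capping their power or clocks, which is what the hardware tuning is about.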

Hardware

First, GPUs. When we competed we - like most teams - had Nvidia cards. You can use the CLI tool nvidia-smi to cap your GPUs at a maximum frequency or a maximum power. Try both: depending on the task, one may be faster and the other may give a more stable power consumption.
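As a rough sketch of the two options, the helpers below only build the command lines, so you can print and check them before running anything. They assume `nvidia-smi -pl` for the power limit and `nvidia-smi -lgc` for locking the clock range, on a GPU and driver that support both (typically with root privileges). The wattage and clock values are placeholders, not the settings we used.

```python
def power_cap_cmd(gpu_index, watts):
    """nvidia-smi command that sets a power limit (in watts) on one GPU."""
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def clock_cap_cmd(gpu_index, min_mhz, max_mhz):
    """nvidia-smi command that locks one GPU's clocks to a min,max range in MHz."""
    return ["nvidia-smi", "-i", str(gpu_index), "-lgc", f"{min_mhz},{max_mhz}"]


# Placeholder values for a node with four GPUs.
for gpu in range(4):
    print(" ".join(power_cap_cmd(gpu, 180)))
    print(" ".join(clock_cap_cmd(gpu, 1000, 1200)))

# To actually apply a setting, pass the list to subprocess.run(cmd, check=True).
```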

Second CPUs. Use cpupower frequency-info -p to read out and cpupower frequency-set to set frequencies. We wrote a script for that to quickly set frequencies across all nodes using pdsh.
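This is not our actual script, but the idea can be sketched as follows: build the `cpupower` call once and let `pdsh` fan it out to every node. The host range `node[01-04]` is a hypothetical naming scheme, and `-u` sets the maximum frequency.

```python
import shlex


def cpupower_cmd(max_freq):
    """cpupower call that caps the maximum CPU frequency, e.g. '2.6GHz'."""
    return ["cpupower", "frequency-set", "-u", max_freq]


def pdsh_cmd(nodes, remote_cmd):
    """Wrap a command so pdsh runs it on all hosts in `nodes`."""
    return ["pdsh", "-w", nodes, shlex.join(remote_cmd)]


# 'node[01-04]' is a made-up host range for this sketch.
cmd = pdsh_cmd("node[01-04]", cpupower_cmd("2.6GHz"))
print(cmd)

# To actually run it: subprocess.run(cmd, check=True)
```

Keeping the command as a list avoids quoting surprises when the host range contains brackets.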

And frequency matters! At SC19, the SST Ember input had ten iterations, each repeatedly doing the same kind of work. When running at 2.6 GHz one iteration took 38 min on our hardware. At 2.8 GHz it only took 33 min. For ten iterations that saves you 50 minutes!
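The same arithmetic as a tiny helper, using only the numbers quoted above, in case you want to compare other frequency settings against your remaining competition time:

```python
def minutes_saved(slow_min, fast_min, iterations):
    """Total minutes saved when every iteration gets faster by the same amount."""
    return (slow_min - fast_min) * iterations


def speedup(slow_min, fast_min):
    """Per-iteration speedup factor."""
    return slow_min / fast_min


print(minutes_saved(38, 33, 10))   # 50
print(round(speedup(38, 33), 3))   # per-iteration speedup
print(round(2.8 / 2.6, 3))         # ratio of the two clock frequencies
```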

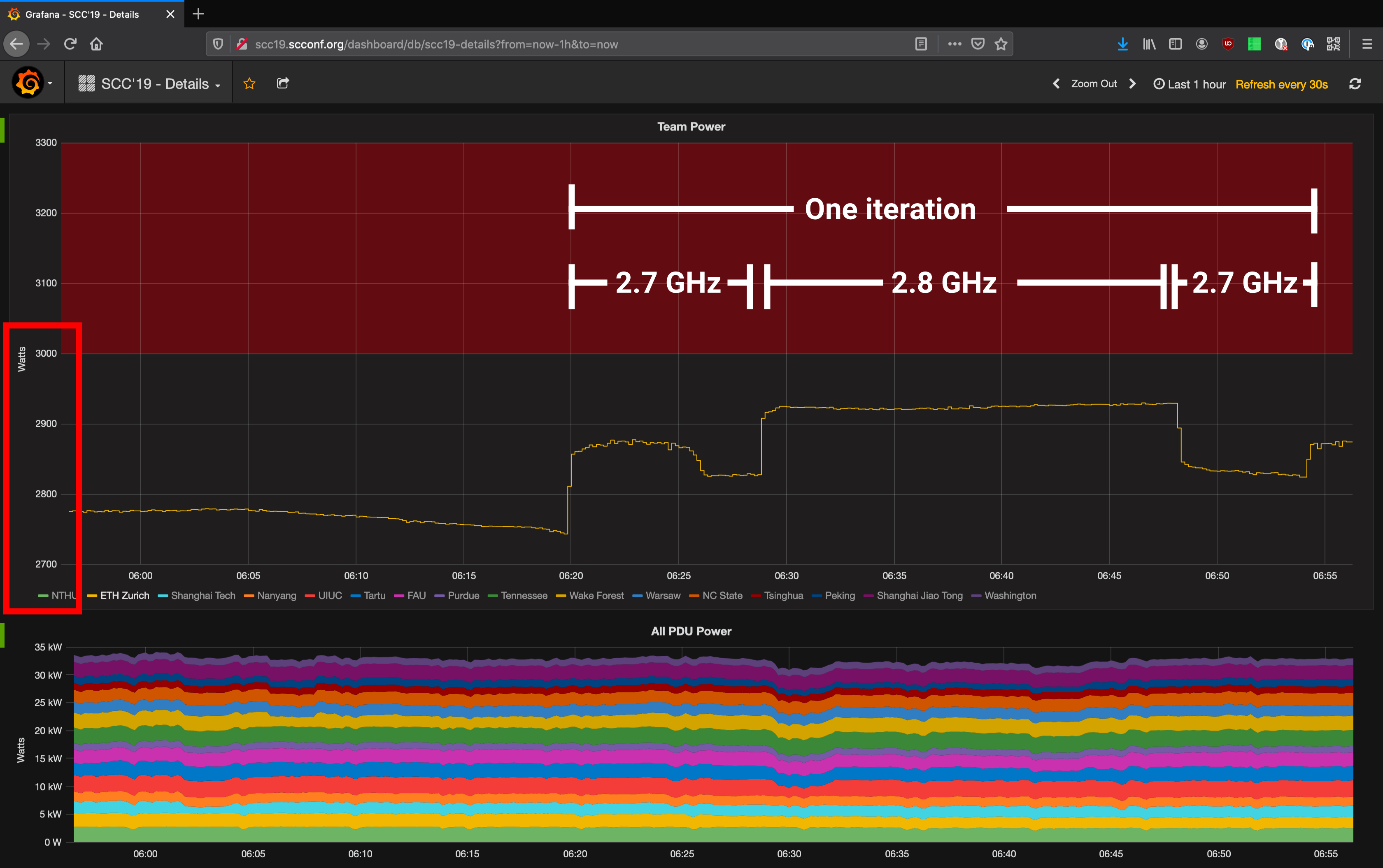

You can also set frequencies manually during a run. (Or automatically, if you’re so inclined to script it.) One prime example is LINPACK, which has a big spike at the beginning and then uses less power if kept capped at the same frequency. In addition, here is an example of us manually adjusting the CPU frequency for SST Ember during SC19 (notice how each iteration has this bump at the beginning, and how close we are driving it to 3 kW):

Also be aware of turbo boost. To avoid sudden unexpected (and unnecessary) spikes over the power limit, you can limit the CPU frequency to something which the CPU can continuously maintain.
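If you want to script the frequency adjustment instead of doing it by hand, the core decision could look like the sketch below. We did this manually, so this is an illustration rather than something we ran: the headroom, step size and frequency bounds are invented defaults, and the function only decides the next cap. Reading the power and applying the frequency are left to whatever tools you already use.

```python
def next_frequency_mhz(current_mhz, power_w, limit_w=3000, headroom_w=100,
                       step_mhz=100, min_mhz=1200, max_mhz=2800):
    """Pick the next CPU frequency cap from the latest power reading.

    All defaults except the 3 kW limit are hypothetical tuning values.
    """
    if power_w > limit_w - headroom_w:
        # Too close to the limit: step down, but not below the minimum.
        return max(min_mhz, current_mhz - step_mhz)
    if power_w < limit_w - 2 * headroom_w:
        # Plenty of room: step up, but not above the sustainable maximum.
        return min(max_mhz, current_mhz + step_mhz)
    # Inside the comfort band: leave the cap alone to avoid oscillating.
    return current_mhz


print(next_frequency_mhz(2600, 2950))  # 2500
print(next_frequency_mhz(2600, 2700))  # 2700
print(next_frequency_mhz(2600, 2850))  # 2600
```

The band between the two thresholds is there so the cap does not flip up and down on every reading. A loop like this reacts only as fast as your power readings arrive, so it will not catch a short spike.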

And third, cooling. Fans use power, too. During especially important things like LINPACK it is possible (but not necessarily recommended) to slow down the fans via your motherboard’s IPMI. Luckily our Gigabyte motherboards had a web interface to do that, but there is also a CLI available.

DISCLAIMER: You MUST test this before the competition. If you set the fans too slow and your system becomes too hot during the run, it becomes unstable, components go into lets-rescue-myself mode and you need to reboot!

Temperature matters too. At SC19 we ran HPCG in the morning just fine, though reproducibly just below the power limit. When we re-ran it in the afternoon, all three attempts spiked over the 3 kW limit (with the exact same configuration, i.e. same inputs, frequencies, fan speeds). We currently attribute this to a 1-2°C increase in temperature in the conference hall (the IPMI was reading a 1-2°C higher CPU temperature, and our cooling fans were at fixed speeds).

Software

Try different compilers: gcc, icc, you name it. -O3 is usually a good start, plus -march=native to let the compiler target the host CPU’s instruction set, including its vector extensions. Do not overdo it with flags, at some point you will get worse results! Also be aware of flags that icc only has for compatibility reasons: e.g. use -xSKYLAKE-AVX512 instead of -march=native.
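One way to keep such experiments organised is to generate the build commands from a small table and time each resulting binary. The sketch below only builds the command lines. The flag sets are the ones named above, while the source file `app.c` and the output naming scheme are hypothetical.

```python
# Flag sets taken from the text; extend with whatever you want to compare.
FLAG_SETS = {
    "gcc": [["-O3"], ["-O3", "-march=native"]],
    "icc": [["-O3"], ["-O3", "-xSKYLAKE-AVX512"]],
}


def build_commands(source, flag_sets=FLAG_SETS):
    """Return one compile command per compiler/flag combination."""
    commands = []
    for compiler, variants in flag_sets.items():
        for i, flags in enumerate(variants):
            output = f"app_{compiler}_{i}"  # hypothetical naming scheme
            commands.append([compiler, *flags, "-o", output, source])
    return commands


for cmd in build_commands("app.c"):
    print(" ".join(cmd))
```

Giving every variant its own binary name means you can benchmark them side by side and keep the winner.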

Also try different MPIs: OpenMPI, IntelMPI, MVAPICH, MPICH. According to their docs MPICH has checkpointing. We only found that out during SC19, but that sounds like something worth looking into. Note that all of them may have slightly different syntax.
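To illustrate the syntax differences, here is a small sketch for two of the four implementations, assuming Open MPI's `mpirun -np … --hostfile …` and MPICH's `mpiexec -n … -f …`. Intel MPI and MVAPICH are left out on purpose; check their documentation for the equivalent options. The program name and hostfile are placeholders.

```python
def launch_cmd(mpi, ranks, hostfile, program):
    """Build a launch command for the given MPI implementation."""
    if mpi == "openmpi":
        return ["mpirun", "-np", str(ranks), "--hostfile", hostfile, program]
    if mpi == "mpich":
        return ["mpiexec", "-n", str(ranks), "-f", hostfile, program]
    raise ValueError(f"no launch template for {mpi!r} in this sketch")


for mpi in ("openmpi", "mpich"):
    print(" ".join(launch_cmd(mpi, 64, "hosts.txt", "./app")))
```

Wrapping the launcher like this makes it easier to swap MPIs in your job scripts without editing every line.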

Input files

Understand your input files! The organisers - especially at SC - give you inputs that are not optimal and are meant to test your understanding of the application. For example for SST the input we got used --partitioner=linear but there are something like five others to partition the workload.

Or if there are matrices involved think about how much you assign to each MPI rank.
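As a generic illustration (not tied to any specific competition application), the sketch below assumes a dense square matrix of double-precision values split evenly across ranks. It picks the most square process grid for a given rank count and estimates the memory each rank has to hold.

```python
import math


def process_grid(ranks):
    """Most square P x Q factorisation of `ranks`, with P <= Q."""
    p = math.isqrt(ranks)
    while ranks % p:
        p -= 1
    return p, ranks // p


def bytes_per_rank(n, ranks, bytes_per_element=8):
    """Approximate memory per rank for a dense n x n matrix split evenly."""
    return n * n * bytes_per_element / ranks


print(process_grid(16))              # (4, 4)
print(process_grid(12))              # (3, 4)
print(bytes_per_rank(40000, 16))     # 800000000.0
```

If the per-rank figure does not fit comfortably in the memory available to each rank, either shrink the problem or change how many ranks you place on each node.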

Ideally you had a chance to play with these parameters beforehand and know what to do. However it’s not unheard of that people (that is, I) have found out about other tuning opportunities while the competition was already going on. So don’t be afraid of a blind shot if you think it can reasonably give you more performance!

Varia

Always check the power reading on the provided official PDU! For one, it generally updates in real time (i.e. every second) while your or the organisers’ Grafana may only update every five to thirty seconds. Our team lost two hours of compute time between 3am and 5am because we were only watching our Grafana, which unfortunately kept showing outdated data, so we didn’t notice our job had finished. So watch the PDU!
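A cheap safeguard against a dashboard that silently shows old data is to compare the timestamp of the newest sample with the current time. The sketch below is one hypothetical way to do that. The tolerance factor is an invented default, and how you fetch the newest timestamp depends on your database.

```python
import time


def is_stale(last_sample_ts, now_ts, expected_interval_s, tolerance=3.0):
    """True if the newest sample is older than `tolerance` update intervals."""
    return (now_ts - last_sample_ts) > expected_interval_s * tolerance


# Example with a 5 s update interval: a 10 s old sample is still fine,
# a 100 s old sample means the dashboard is showing outdated data.
now = time.time()
print(is_stale(now - 10, now, expected_interval_s=5))   # False
print(is_stale(now - 100, now, expected_interval_s=5))  # True
```

Hooking a check like this up to something loud, such as a terminal bell or a red panel, helps at 3am when nobody is reading timestamps.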

The Cluster Competition Experience: On the conference floor

Very exciting, you are finally there at the Supercomputing Conference! You spend a day searching for your cluster because it was shipped to a completely different booth somewhere else in the hall, but now you are all set up and ready to go!

Here are some tips:

- Be ready to mingle with the other teams. Everyone is really nice and very outgoing, and chatting about how you optimise applications or what results you got for that input file is completely normal and okay. It’s great to see more people doing HPC!

- Expect to be interviewed. Not only by the judges, but also by the conference organisers, your sponsors and Dan Olds. Dan publishes articles about HPC (e.g. on HPCwire) and is an incredibly kind, chatty though eloquent and entertaining character. You will notice when you see him! He interviews all teams every year. Because he has been at the cluster competitions since the beginning he knows them inside out.

- At SC (and maybe at ISC in the future?) there is a power outage, planned by the committee. It is meant to test your ability to recover from such an event. This year at SC19 they took everyone by surprise by pulling the power not only once but twice!

- If you can, walk around the conference hall and check out the exhibition. There is cool stuff on display, and sometimes you even get merchandise. Some booths host raffles, so there’s your chance to win a NUC!

Final words

The student cluster competition is an incredible experience. Meeting like-minded students, experiencing the conference itself, and learning a thousand things about HPC, computer systems, networking, compiling and application optimisation in the process make it a unique challenge but also a unique opportunity.

Although secretly, it’s only an international sweets exchange really: we brought Swiss chocolate and received sweets from Estonia, Poland and Shanghai in return.

This post also appeared on the RACKlette team blog


2019-11-27 20:50 +0000